By Mayank J

The 200 MB Model

This may not be an exhaustive guide to optimizing Excel workbooks, but I’m sharing key findings from a recent experience working on a 200 MB Excel-based financial model.

Background: We were tasked with building a data-intensive monthly financial model spanning 6 years, handling over 100,000 rows of sales data. The use of long-range formulas across such a dataset made the workbook painfully slow to calculate.

We decided to challenge some of the conventional financial modelling best practices, just to see if they could be bent (carefully!) in favor of performance.

The top 4 adjustments that significantly improved our model’s performance:

Why performance matters: In today’s world, it’s no longer just about file size, Excel calculation speed is the real bottleneck. Even a 50 MB workbook can be frustrating if it takes minutes to recalculate or respond.

Before diving into the four methods above, start with this quick optimization checklist:

Once these basics are addressed, and if performance is still an issue, here are the advanced techniques we used:

Final Thoughts:

Yes, sometimes you have to bend best practices to suit real-world performance needs. But do so consciously. Always weigh usability vs. transparency.

📌 Have you faced similar Excel challenges? Share your experiences in the comments, we'd love to hear your solutions!

Background: We were tasked with building a data-intensive monthly financial model spanning 6 years, handling over 100,000 rows of sales data. The use of long-range formulas across such a dataset made the workbook painfully slow to calculate.

We decided to challenge some of the conventional financial modelling best practices, just to see if they could be bent (carefully!) in favor of performance.

The top 4 adjustments that significantly improved our model’s performance:

- Using OFFSET selectively

- Applying daisy chaining in formulas

- Hardcoding calculated values using macros (with a toggle to restore formulas)

- Separating input sheets based on classification

Why performance matters: In today’s world, it’s no longer just about file size, Excel calculation speed is the real bottleneck. Even a 50 MB workbook can be frustrating if it takes minutes to recalculate or respond.

Before diving into the four methods above, start with this quick optimization checklist:

- ✅ Check and reset the last used cell across all sheets

- ✅ Remove external links

- ✅ Avoid conditional formatting in large models

- ✅ Delete unnecessary objects and confirm no hidden objects remain

- ✅ Set calculation mode to Manual

- ✅ Save your file as .xlsb instead of .xlsx/.xlsm , this alone can save 50%+ in size

- ✅ Check for and remove any circular references

- ✅ Ensure there are no hidden sheets, rows, or columns

- ✅ Review named ranges and data validation to eliminate long/invalid entries

Once these basics are addressed, and if performance is still an issue, here are the advanced techniques we used:

- Use of OFFSET

OFFSET is typically discouraged due to tracing issues as it's hard to track precedents and maintain transparency. But in high-volume data models, it can offer massive gains.

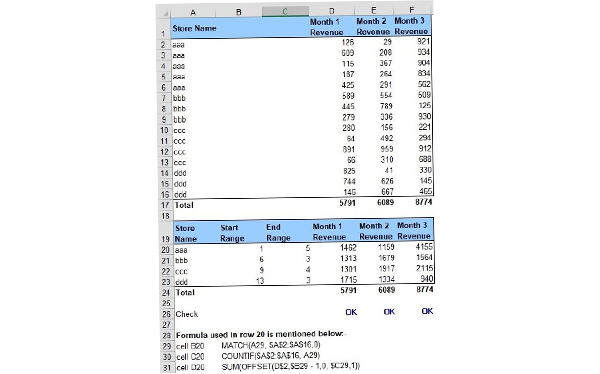

For example, if you're using SUMIF over 100,000 rows, Excel evaluates every cell for a match. However, if you know the rows for each condition, you can sort data and use OFFSET to directly sum the relevant block thereby skipping 99,000 irrelevant checks.

Imagine you have 10 stores, each with 1,000 products. You want monthly revenue sums for each store across 6 years. Instead of filtering every row each time, use OFFSET to jump directly to each block.

This dramatically improves performance. In our case, inserting rows went from 2 minutes to 1 second. - Daisy Chain Linking

Best practices suggest referencing original source cells, and rightly so, since `Ctrl + [` jumps directly to the source.

But when you're linking 10,000+ cells to one source, Excel slows down, especially when inserting/deleting rows. Each reference has to be adjusted.

With daisy chaining, you only update a handful of links at a time. This reduces Excel’s recalculation burden, making it faster to edit sheets , even though it sacrifices some traceability. - Hardcode Calculated Values with Macro

This one needs a bit of VBA. When you're dealing with 100,000 rows × 72 columns = 7.2 million cells, recalculation can be painfully slow.

In our case, we automated a macro that:- Replaces calculated results with values during most of the time

- Temporarily restores formulas from a stored version when required

This reduced our file from 200 MB to 50 MB, with a noticeable boost in speed and responsiveness. - Separate Inputs into Multiple Sheets

Models often consolidate inputs into a single sheet for audit convenience. But for datasets this large (revenue, cost, quantity, price, tax for 100,000+ items), it’s impractical.

We split inputs across multiple sheets, one for revenue, one for cost, one for quantity, etc.

Then we created a Global Inputs Index sheet that links to all individual inputs. This makes inputs easier to manage, navigate, and update without bloating any single sheet.

Final Thoughts:

Yes, sometimes you have to bend best practices to suit real-world performance needs. But do so consciously. Always weigh usability vs. transparency.

📌 Have you faced similar Excel challenges? Share your experiences in the comments, we'd love to hear your solutions!

3 Comments

Anurag Kushwaha

XSLB is a binary format, saves space and increases calculation speed.

James Carter

Didn't know .xlsb could save that much space! Switching now!

Emily Thompson

Thanks for highlighting performance over size. I've been guilty of focusing too much on MBs and not enough on calculation lag. After trying .xlsb and removing unnecessary formatting, my model performance improved drastically. Looking forward to trying the OFFSET and modular input techniques next!